COCO dataset citation information


(c) The performance of a modern semantic segmentation method on. Authors for releasing their open-source code. With a total of 2.5 million labeled instances in 328k images, the creation of our dataset drew upon extensive crowd worker involvement via novel user interfaces for category detection, instance spotting and instance segmentation.

The dataset is based on the MS COCO dataset, which contains images of complex. These datasets are collected by asking human raters to disambiguate objects delineated by bounding boxes in the COCO dataset. The converted dataset was generated as part of this notebook. NVIDIA for donating GPUs used in this research. A collection of 3 referring expression datasets based on images in the COCO dataset.

1) updated annotation pipeline description and figures;

Provided here are all the files from the 2017 version, along with an additional subset dataset created by fast.ai. The fast.ai subset contains all images that contain. Details of each COCO dataset are available from the COCO dataset page.

Source: researchgate.net


The developers of different deep learning frameworks (Torch, Caffe, TensorFlow). Specifically, neuraltalk, vqa_lstm_cnn, hiecoattenvqa and bidirectional image captioning. Our dataset contains photos of 91 object types that would be easily recognizable by a 4-year-old. (1) We sample a few images per class from BigGAN and manually annotate them with masks.

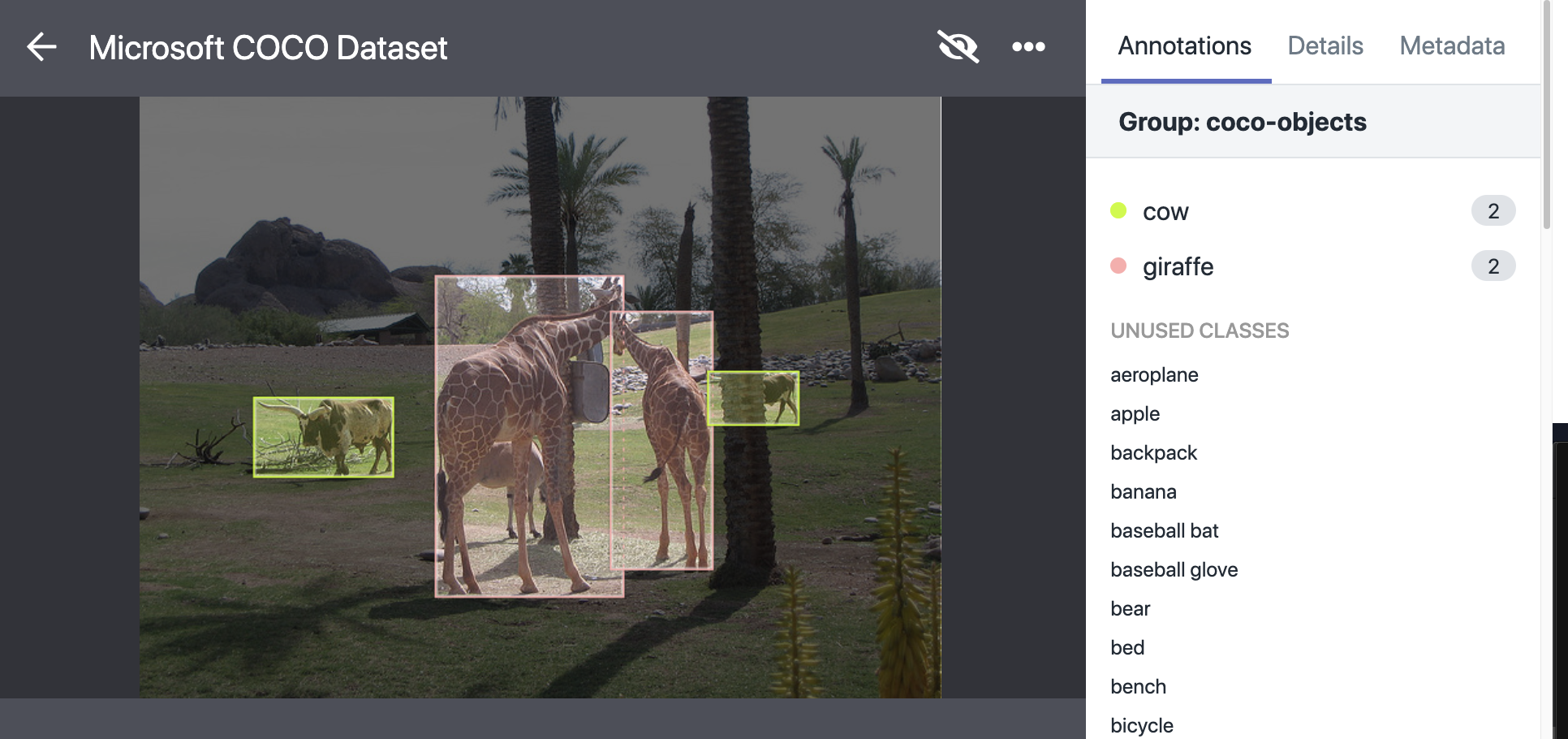

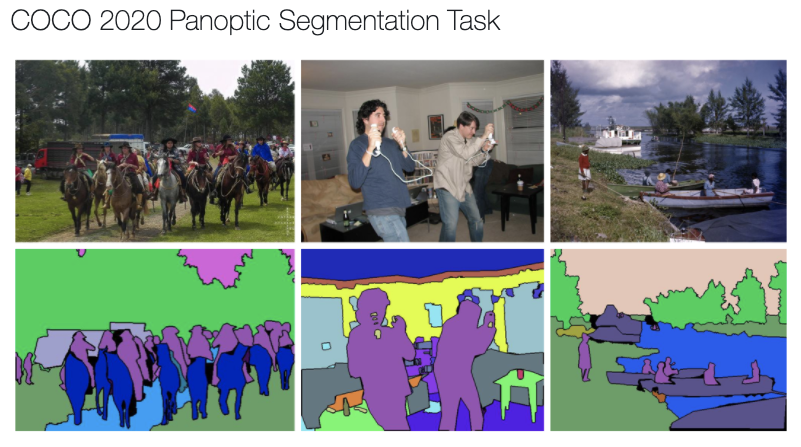

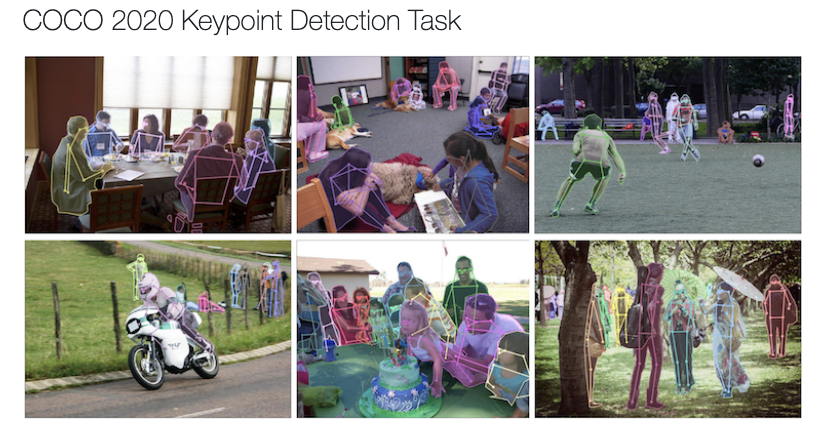

Source: blog.roboflow.com


This dataset contains annotations and images of the VinBigData chest abnormalities competition in COCO format, with the weighted boxes fusion technique applied to select or fuse multiple bounding boxes of the same chest abnormality annotated by several radiologists. Converts your object detection dataset into a classification dataset CSV.
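The detection-to-classification conversion mentioned above can be sketched as follows. This is only an illustration under stated assumptions: the input is a COCO-format dictionary with `images`, `annotations` and `categories` keys, and the output layout (one row per image with a space-separated `labels` column) is hypothetical, since the source does not describe the actual CSV columns. The helper names are invented for this example.

```python
import csv
import io


def detection_to_classification_rows(coco):
    """Collapse per-box annotations into one (file_name, labels) row per image.

    Assumes a COCO-format dict; the row layout is a hypothetical choice.
    """
    names = {cat["id"]: cat["name"] for cat in coco["categories"]}
    labels = {}
    for ann in coco["annotations"]:
        # Box coordinates are dropped; only the class name is kept per image.
        labels.setdefault(ann["image_id"], set()).add(names[ann["category_id"]])
    rows = []
    for img in coco["images"]:
        joined = " ".join(sorted(labels.get(img["id"], ())))
        rows.append((img["file_name"], joined))
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["file_name", "labels"])
    writer.writerows(rows)
    return buf.getvalue()


# Toy data (illustrative values only, not taken from COCO).
toy = {
    "images": [{"id": 1, "file_name": "a.jpg"}, {"id": 2, "file_name": "b.jpg"}],
    "categories": [{"id": 1, "name": "cat"}, {"id": 2, "name": "dog"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 2},
        {"id": 11, "image_id": 1, "category_id": 1},
        {"id": 12, "image_id": 1, "category_id": 2},
    ],
}
rows = detection_to_classification_rows(toy)
csv_text = rows_to_csv(rows)
print(csv_text)
```

Images with no boxes get an empty `labels` field here; whether to keep or drop such rows is a design choice that depends on the downstream classifier.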


- COCO 2014 and 2017 use the same images, but different train/val/test splits.
- The test split doesn't have any annotations (only images).

(a) The importance of stuff and thing classes in terms of their surface cover and how frequently they are mentioned in image captions;


If you find this dataset or code base useful in your research, please consider citing the following papers:


@article{gupta2015visual, title={Visual Semantic Role Labeling}, author={Gupta, Saurabh and Malik, Jitendra}, journal={arXiv preprint arXiv:1505.04474}, year={2015}} @incollection{lin2014microsoft, title={Microsoft COCO:

RefCOCO and RefCOCO+ are from Kazemzadeh et al.


There are four structural bridge details labeled in this dataset:

For reproducing experiments) Section 4.1:


Dog, unmixed version) code for distribution shift experiments; (3) We sample large synthetic datasets from BigGAN & VQGAN.

- COCO defines 91 classes but the data only uses 80.
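Because fewer classes are used than are defined, the category ids in the annotation files are not a contiguous range, and training code commonly remaps them to 0-based indices. A minimal sketch of such a remapping, assuming COCO-format category records with an `id` field; the function name and the toy ids below are hypothetical and are not the real category list.

```python
def build_contiguous_index(categories):
    """Map each category id to a 0-based contiguous index, in ascending id order."""
    ids = sorted(cat["id"] for cat in categories)
    return {cat_id: idx for idx, cat_id in enumerate(ids)}


# Toy category list with gaps in the ids (illustrative values only).
toy_categories = [
    {"id": 13, "name": "stop sign"},
    {"id": 1, "name": "person"},
    {"id": 3, "name": "car"},
]
id_to_index = build_contiguous_index(toy_categories)
print(id_to_index)  # {1: 0, 3: 1, 13: 2}
```

Sorting by id keeps the mapping deterministic regardless of the order in which categories appear in the file.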

Source: gitcode.net


Constructing MetaShift from COCO dataset. This dataset contains a total of 800 VHR optical remote sensing images, where 715 color images were acquired from Google Earth with the spatial resolution ranging from 0.5 to 2 m, and 85 pansharpened color infrared images were acquired from Vaihingen data with.


(b) The spatial relations between stuff and things, highlighting the rich contextual relations that make our dataset unique; A custom CSV format used by the Keras implementation of RetinaNet.


(2) We train a feature interpreter branch on top of BigGAN's and VQGAN's features on this data, turning these GANs into generators of labeled data.


Bearings, cover plate terminations, gusset plate connections, and out-of-plane stiffeners.


- Some images from the train and validation sets don't have annotations.
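Images without annotations can be listed before training so they are handled deliberately rather than by accident. A minimal sketch, assuming a COCO-format dictionary with `images` and `annotations` lists in which each annotation carries an `image_id`; the function name and the toy data are hypothetical.

```python
def images_without_annotations(coco):
    """Return the ids of images that no annotation refers to, in file order."""
    annotated = {ann["image_id"] for ann in coco.get("annotations", [])}
    return [img["id"] for img in coco.get("images", []) if img["id"] not in annotated]


# Toy data (illustrative values only).
toy = {
    "images": [{"id": 1, "file_name": "a.jpg"}, {"id": 2, "file_name": "b.jpg"}],
    "annotations": [{"id": 10, "image_id": 1, "category_id": 3}],
}
unannotated = images_without_annotations(toy)
print(unannotated)  # [2]
```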


This version contains images, bounding boxes, labels, and captions from COCO 2014, split into the subsets defined by Karpathy and Li (2015).
