Cost sensitive learning methods for imbalanced data citation information

The insight gained from a comprehensive analysis of the AdaBoost ... On Dec 19, 2018, Akbar Khan published "Cost sensitive learning and SMOTE methods for imbalanced data" (PDF available on ResearchGate). When learning from highly imbalanced data, most classifiers are overwhelmed by the majority class examples, so the false negative rate tends to be high.
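To make the false negative problem concrete, here is a minimal sketch in plain Python. The 990-to-10 class split and the "always predict the majority class" classifier are assumptions chosen for illustration; they do not come from any of the cited papers. The point is that such a classifier looks accurate while missing every minority example.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1
            else:
                fn += 1
        else:
            if pred == positive:
                fp += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all examples that were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def false_negative_rate(tp, fn):
    """Fraction of actual positives that the classifier missed."""
    return fn / (tp + fn) if (tp + fn) else 0.0


# Hypothetical data: 990 majority (0) and 10 minority (1) examples.
y_true = [0] * 990 + [1] * 10
# A degenerate classifier that always predicts the majority class.
y_pred = [0] * 1000

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print("accuracy:", accuracy(tp, fp, tn, fn))
print("false negative rate:", false_negative_rate(tp, fn))
```

Accuracy alone hides the failure here, which is why work on imbalanced data usually reports per-class error rates as well.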

(PDF) Performance analysis of cost-sensitive learning (from researchgate.net)

Researchers have introduced many methods to deal with class imbalance. Resampling works at the data level, without changing the underlying learning algorithms.

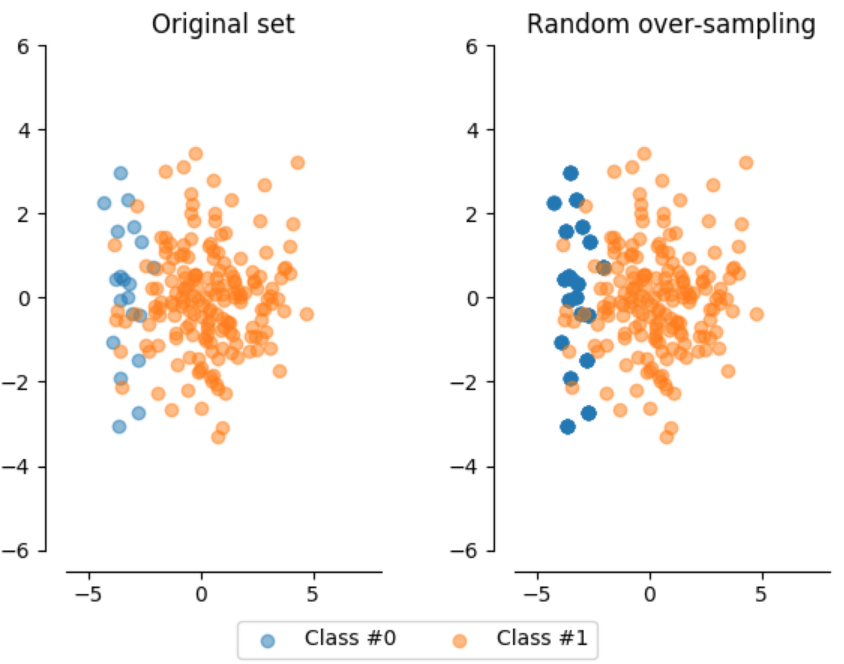

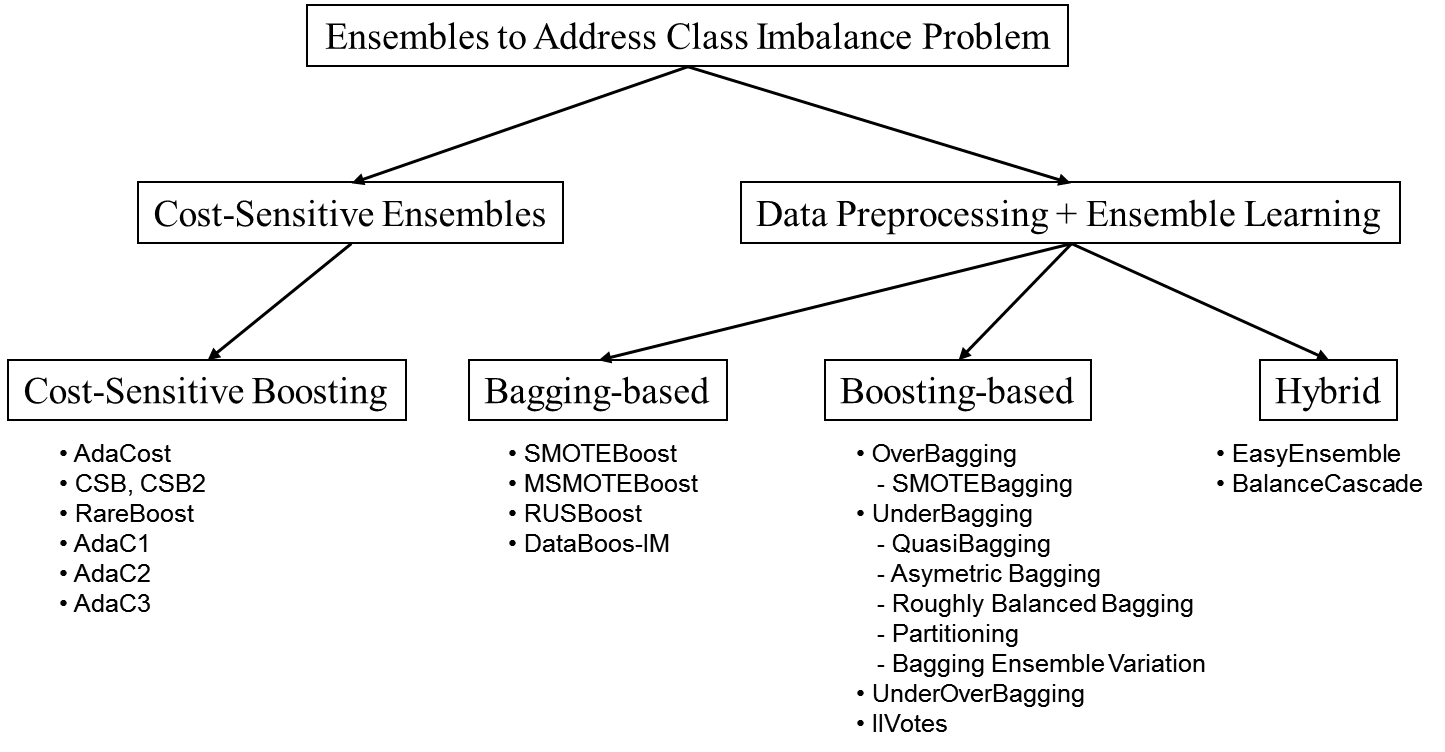

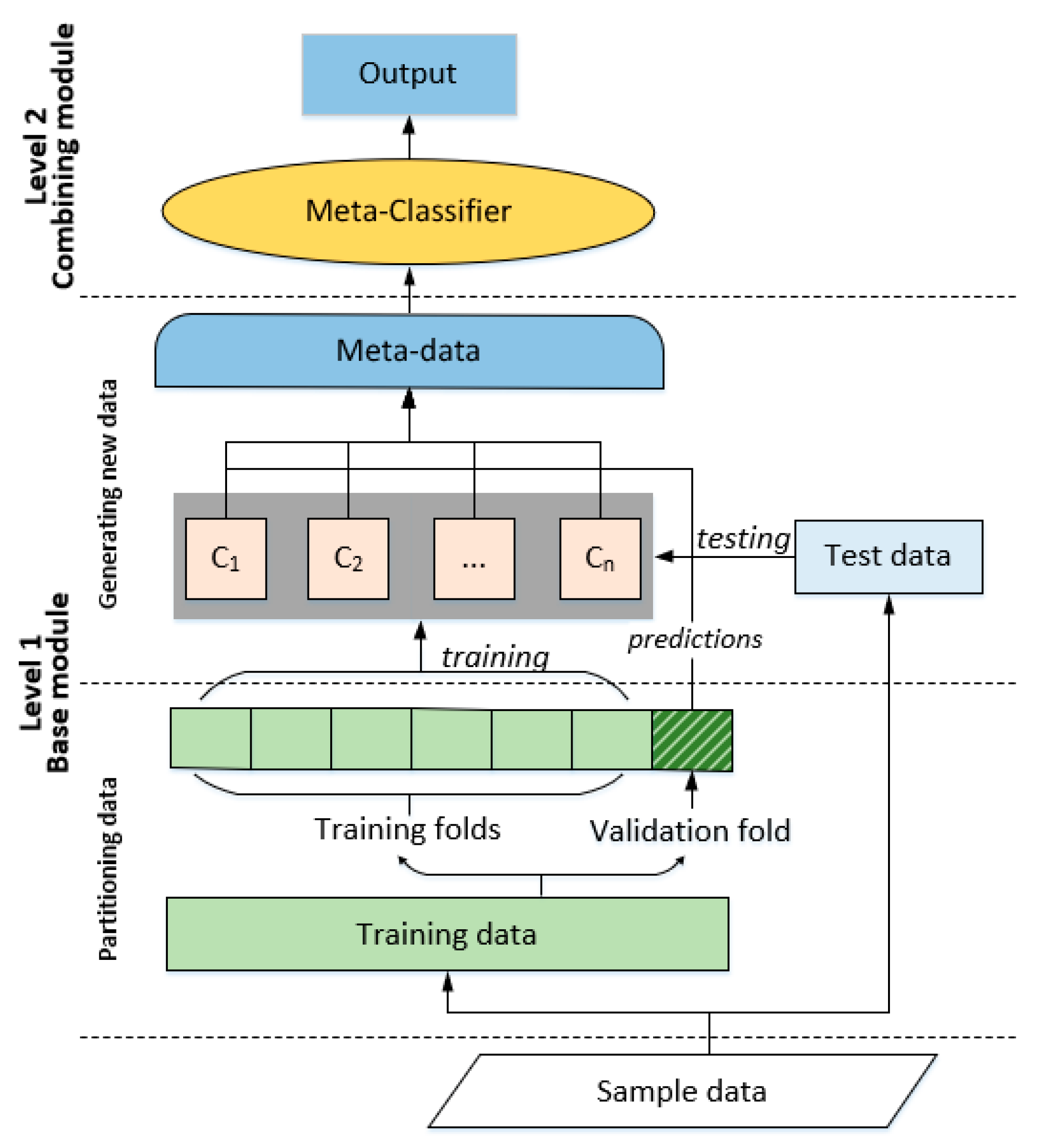

Class imbalance is one of the challenging problems for machine learning algorithms. In imbalanced learning, resampling methods modify an imbalanced dataset to form a balanced dataset. The 2010 International Joint Conference ...
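As a rough illustration of resampling, the sketch below performs random oversampling: it duplicates randomly chosen examples of the smaller classes until every class matches the largest one. The function name `random_oversample`, the toy data, and the fixed seed are assumptions made for this example; the cited papers may use different resampling schemes.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen examples of the smaller classes until
    every class has as many examples as the largest class."""
    rng = random.Random(seed)

    # Group the samples by class label.
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())

    out_samples, out_labels = [], []
    for y, xs in by_class.items():
        # Draw the missing examples with replacement from the same class.
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        for x in xs + extra:
            out_samples.append(x)
            out_labels.append(y)
    return out_samples, out_labels


# Hypothetical toy data: 10 majority and 2 minority examples.
X = [[i, i + 1] for i in range(12)]
y = [0] * 10 + [1] * 2

X_bal, y_bal = random_oversample(X, y)
print(Counter(y_bal))
```

Resampling of this kind is normally applied to the training split only, so that evaluation still reflects the original class distribution.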

Source: researchgate.net

Many base classifiers perform better on balanced datasets than on imbalanced ones.

Uncertainties in real data create bias and make predictive modeling an even more difficult task. Methods in the second and third groups adapt the existing learning algorithms and therefore work at the algorithm level.
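The text does not say which algorithm-level methods it has in mind. One common example is a cost-sensitive decision rule, sketched below under stated assumptions: the classifier outputs a probability for the positive (minority) class, correct predictions cost nothing, and the cost values are made up for illustration. Under those assumptions, predicting positive minimizes expected cost whenever the probability is at least `cost_fp / (cost_fp + cost_fn)`.

```python
def cost_sensitive_threshold(cost_fp, cost_fn):
    """Probability above which predicting 'positive' has lower expected cost.

    Assumes correct predictions cost nothing:
      expected cost of predicting negative = p * cost_fn
      expected cost of predicting positive = (1 - p) * cost_fp
    """
    return cost_fp / (cost_fp + cost_fn)


def predict(prob_positive, cost_fp=1.0, cost_fn=1.0):
    """Return 1 (positive) or 0 (negative) for a predicted probability."""
    threshold = cost_sensitive_threshold(cost_fp, cost_fn)
    return 1 if prob_positive >= threshold else 0


# Hypothetical costs: missing a minority example is 9 times as costly
# as raising a false alarm.
print(cost_sensitive_threshold(cost_fp=1.0, cost_fn=9.0))
print(predict(0.2))                            # equal costs, threshold 0.5
print(predict(0.2, cost_fp=1.0, cost_fn=9.0))  # unequal costs, threshold 0.1
```

Raising the cost of a false negative lowers the threshold, so the classifier flags more minority examples at the price of more false alarms.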

Researchers have introduced many methods to deal with this problem. The purpose of the paper is to apply machine learning algorithms under the SMOTE and cost-sensitive learning approaches, and to compare the results of the different experiments to find a suitable approach for imbalanced data.
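SMOTE (Synthetic Minority Over-sampling Technique) creates new minority examples by interpolating between existing ones instead of duplicating them. The sketch below is a simplified, SMOTE-style illustration in plain Python, not the implementation used in the paper: the function name `smote_like`, the toy points, and the parameter defaults are assumptions for this example.

```python
import math
import random


def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points, each lying on the line segment between
    a random minority point and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Sort the other minority points by Euclidean distance to the base.
        others = [p for j, p in enumerate(minority) if j != i]
        others.sort(key=lambda p: math.dist(base, p))
        neighbour = rng.choice(others[:k])
        # Pick a random position between the base point and the neighbour.
        gap = rng.random()
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Hypothetical minority-class points in two dimensions.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
new_points = smote_like(minority, n_new=5)
print(len(new_points))
```

Because each synthetic point sits between two real minority points, the minority region is filled in without adding exact copies.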

The presence of highly imbalanced data misleads existing feature extraction techniques into producing biased features, which ...

Cited authors include Adel Ghazikhani, Reza Monsefi, and Hadi Sadoghi Yazdi.

Real data are frequently affected by outliers, uncertain labels, and an uneven distribution of classes (imbalanced data).
